SnapHunt Bingo - A real life version of GeoBingo.io

SnapHunt Bingo - The idea and our plans

This is the first part of a three-part blog post about the game project "SnapHunt Bingo", which I’m developing with three other university students as part of our obligatory software project.

Bingo, the old people’s game? Who wants to play that?

Well, let me introduce you to our new, innovative game that isn’t played in a retirement home but outside in the real world. Yes, you have to leave your gaming chair, but it will be an adventure you won’t forget for a long time.

This is a social game, so you need some friends to enjoy it. Theoretically you can play with just two players, but it’s more fun to set off in groups of two, so four or more players are recommended.

This is also a mobile game: You need a smartphone running the app or the web version.

But what’s all this about?

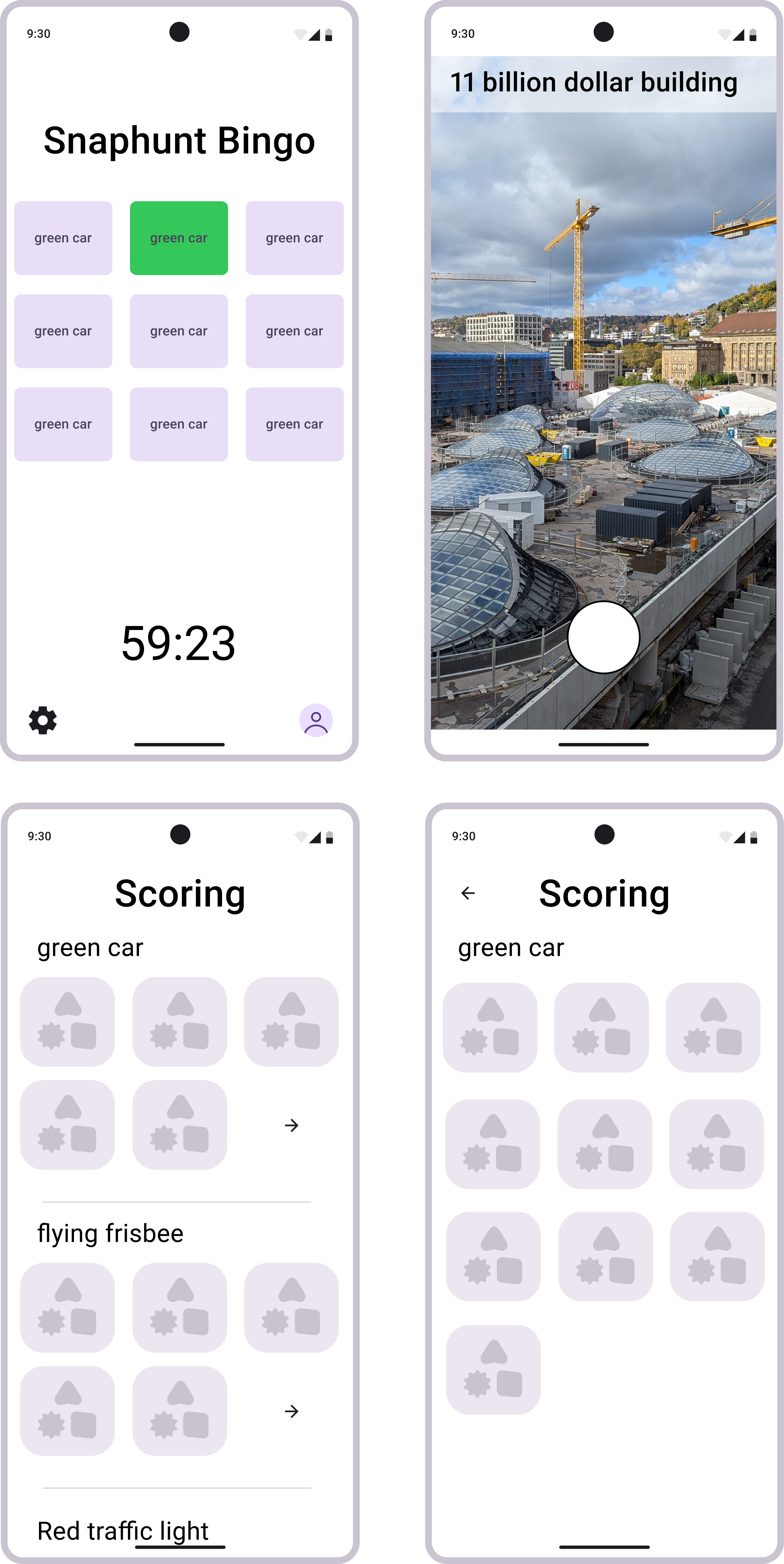

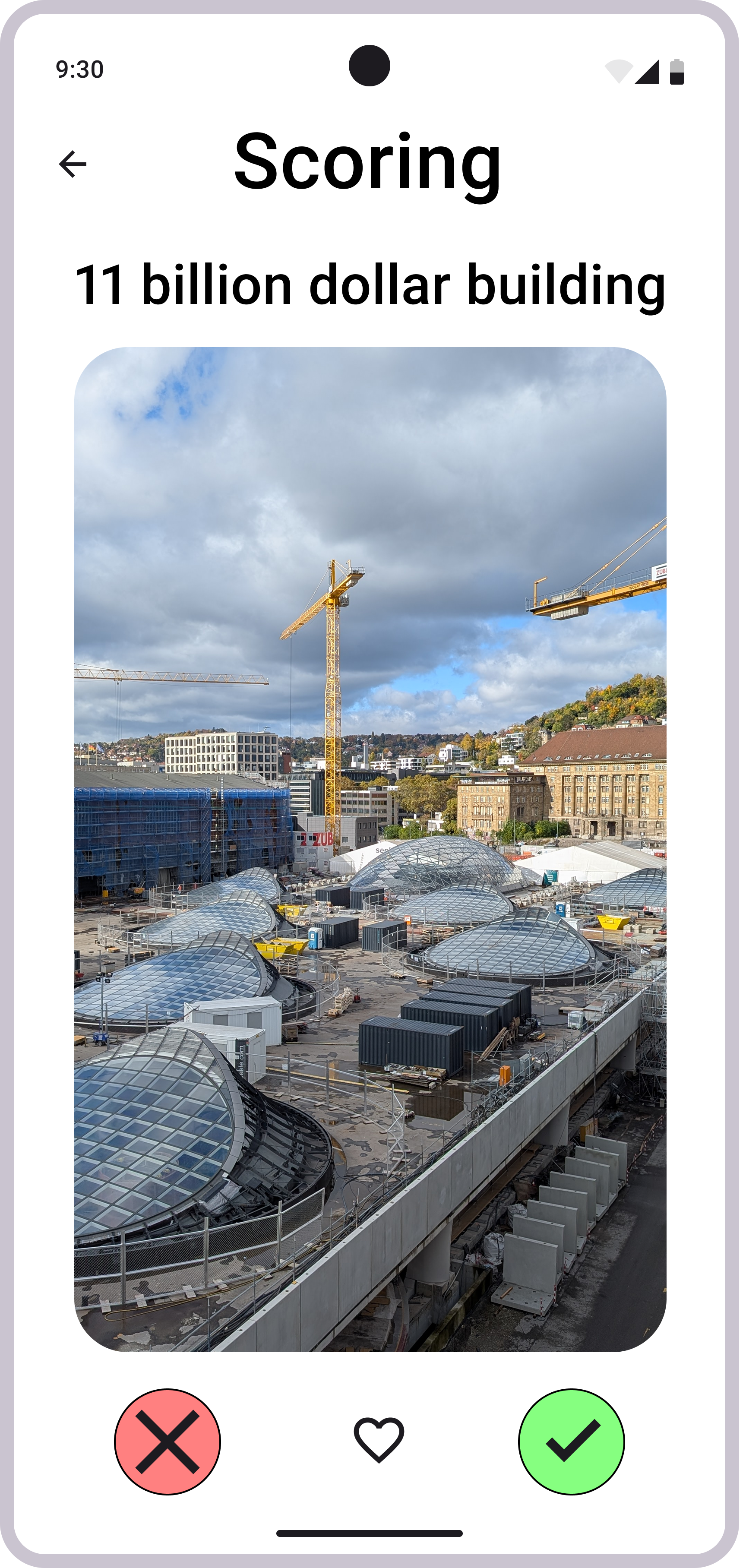

To summarize: You have to find the things on your bingo card in real life and take a picture of each one. These things can be anything you can see or touch in the real world, for example a pink car, a burning candle, a rabbit, a helicopter, yellow road markings, an olive, a disused fridge or a flying frisbee. As a group, you can either come up with your own words or use a list of predefined words (or a mix of both). All players have the same set of words, but they are arranged differently on each bingo board. Once you have taken a picture, the word is checked off, and if you complete a whole row you get bonus points. At the end of each game, all players get together to discuss the pictures they hunted. You might argue about whether the car is really pink or rather purple, or whether the raccoon is in fact a cat. To help with filtering all the pictures, we might add an AI integration that analyzes the easily recognizable images.
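The board rules above can be sketched in a few lines of Python. This is only an illustration under assumptions, not our actual implementation: the function names `make_board` and `score` and the point values (1 point per word, 3 bonus points per complete row) are made up for this example.

```python
import random


def make_board(words, size, rng):
    """Arrange the shared word list into a size x size grid in random order."""
    if len(words) != size * size:
        raise ValueError("need exactly size * size words")
    shuffled = list(words)
    rng.shuffle(shuffled)
    return [shuffled[r * size:(r + 1) * size] for r in range(size)]


def score(board, found, points_per_word=1, row_bonus=3):
    """Add up points for found words plus a bonus for each complete row.

    The point values are assumptions chosen for illustration.
    """
    total = 0
    for row in board:
        hits = sum(1 for word in row if word in found)
        total += hits * points_per_word
        if hits == len(row):
            total += row_bonus
    return total


words = [
    "pink car", "burning candle", "rabbit",
    "helicopter", "yellow road markings", "olive",
    "disused fridge", "flying frisbee", "raccoon",
]

# Two players get the same words, but each board is shuffled independently.
board_a = make_board(words, 3, random.Random(1))
board_b = make_board(words, 3, random.Random(2))
```

Passing a separate `random.Random` instance per player keeps the boards independent and makes the shuffling reproducible in tests.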

Inspiration

To be clear upfront: We didn’t invent this game. The first version of this type of game was “GeoBingo”, which became popular through Twitch streamers. It is not played in real life but in Google Street View. You agree on a list of words and search for them all over the world by skimming through Google Street View imagery. You can play this game on https://geobingo.io/ but it costs money, since the Google Street View API costs are rather high. Since the game is open source, you can self-host it and use your own API key (which is free if you don’t spend every second of your free time playing this game).

Next, some streamers came up with the idea of bringing this game into real life. It looked like a fun activity for a group of friends, so some friends and I tried it out. But it was a hassle to keep track of the words and all the pictures, so we decided we needed a proper mobile application for this task. This is how SnapHunt Bingo was born.

Design

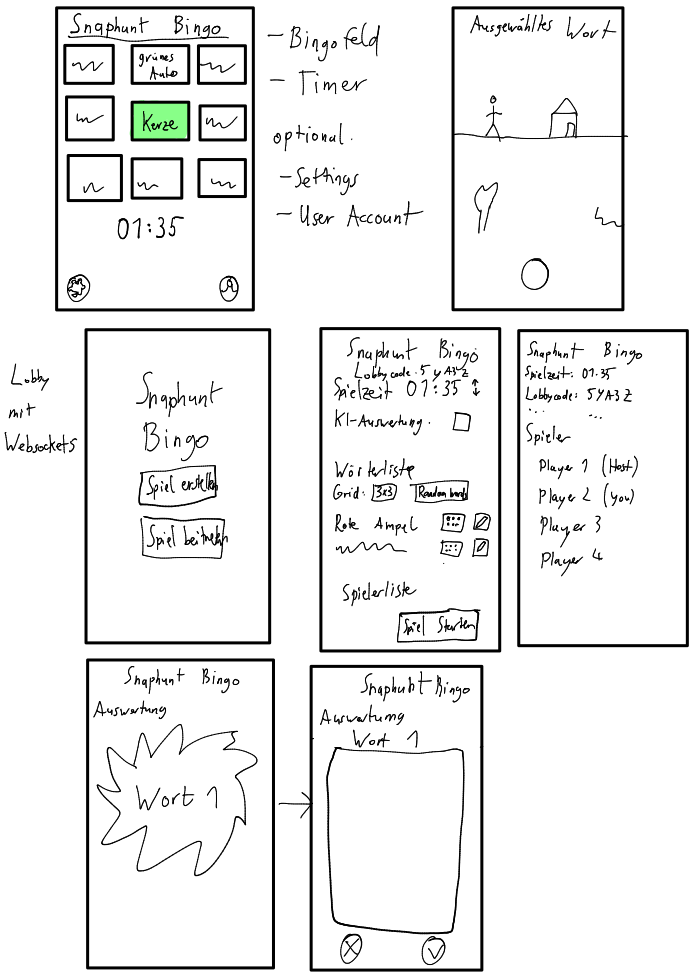

I already had some ideas about what the game flow should be like and how the app’s pages should look, so I scribbled some concepts:

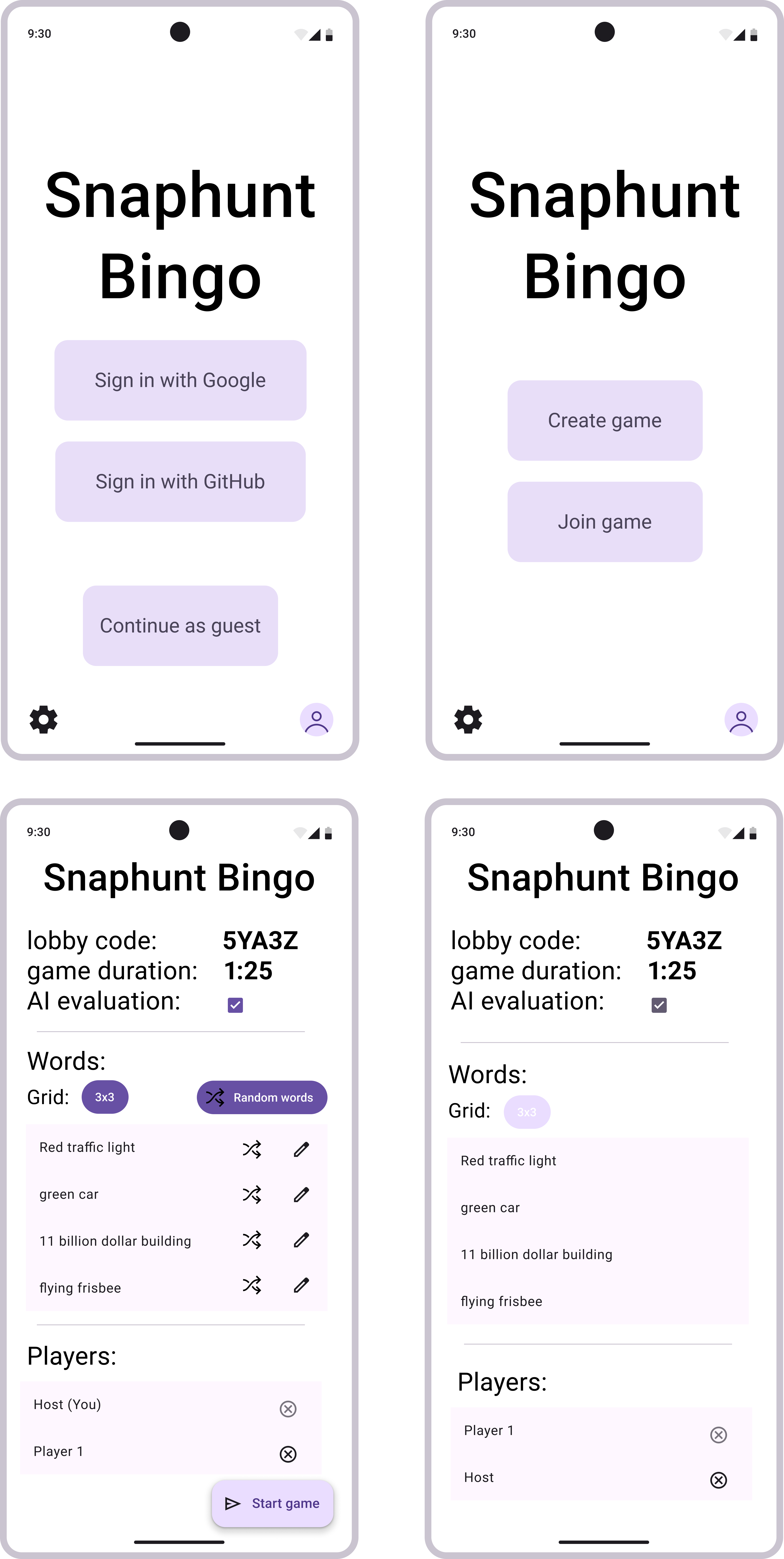

After some discussion with the team I created prettier versions of the screens with Figma:

Those designs are not final but they are a good foundation for discussion about the design and an inspiration for the development process.

They are also great for giving our readers an impression of what the game will look like and how it works.

Team structure

To make our development process more efficient, we split our team into two parts. Björn and Leon are responsible for the frontend section of SnapHunt Bingo while Abel and Erzan focus on the backend and AI integration of our project.

This separation of responsibilities allows each of us to concentrate on our strengths and ensures a cleaner, better-structured development process.

If scheduling issues arise and the backend backlog begins to grow, Leon will also assist with backend tasks. This flexibility helps us keep the workflow steady, even when unexpected issues occur.

We manage our tasks in GitLab using separate issue tags for the frontend and backend. Team members can select the issues they want to work on. To ensure that our project makes the desired progress, we meet every Monday evening to discuss progress, upcoming tasks and other open questions.

Frontend

We considered multiple framework options for our frontend development: Flutter, React Native and, after our mentoring professor suggested it, the Godot Engine.

In the end we decided on Flutter for several reasons:

- Cross-platform support: With a single codebase, we can deploy our application on multiple platforms. Our primary platform will be Android, but if there is enough time we want to deploy our application on the web too.

- UI flexibility: Flutter has a very extensive widget library which enables us to easily build consistent and modern app designs without huge effort.

- Strong community: Flutter is still actively developed by Google and has a big and active developer community, which helps us find solutions to issues we might come across.

While our other options share some of those advantages, a key factor was that Björn already has some basic knowledge of Flutter, while the other two options would have been completely new to us.

We use Android Studio, which is built on JetBrains’ IntelliJ IDEA, as our main development environment for the frontend. It integrates easily with Flutter and provides us with an Android Emulator, which we will use during the first weeks to try out our application.

Backend

The first reason we decided to use Python in the backend was that we wanted to learn something new, as none of us has any experience using Python as a backend language. The second and bigger reason is Python’s AI compatibility: Python is the industry standard for AI development, so choosing it was a no-brainer.

Django is one of the biggest Python backend frameworks and has a lot of built-in features and libraries that will spare us a lot of development time. We also discussed other options such as FastAPI and Flask, because the way Django handles async functions might cause performance issues.

In the end we decided on Django for our backend, as a potential performance bottleneck should not occur in our use case, and we will save some time by using its vast libraries.

The AI Problem

The last decision to be made is how to tackle the image identification.

We have to balance word complexity, flexibility of user input, accuracy and computing power, and that is something that needs more time to test and plan. If we let users pick their own words and have the AI check the uploads, we cannot guarantee reliable results when a word is too obscure.

Speaking of complexity, the words we provide cannot be too complex, but they also shouldn’t be too boring. For example: A simple image classifier can identify a car. But there are a lot of cars on the street, so simply using “car” as a word would be boring for the player, although it would be easy for us to implement reliably. Most simple image classifiers start to struggle as the complexity increases. If we provide “green car” as a bingo field, the image classifier might accept a non-green car next to a green bush, a false positive that should be avoided.
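To make the “green car” false positive concrete, here is a minimal sketch in Python. It assumes a hypothetical detector that reports one label and one dominant color per detected object; the `Detection` class, both function names and the example scene are made up for illustration and do not describe our actual classifier.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str  # hypothetical object class, e.g. "car"
    color: str  # hypothetical dominant color of that object only


def naive_match(color, label, detections):
    """Passes if the color and the object appear anywhere in the image."""
    has_label = any(d.label == label for d in detections)
    has_color = any(d.color == color for d in detections)
    return has_label and has_color


def strict_match(color, label, detections):
    """Passes only if a single detected object has both the label and the color."""
    return any(d.label == label and d.color == color for d in detections)


# Made-up scene: a red car parked next to a green bush.
scene = [Detection("car", "red"), Detection("bush", "green")]

print(naive_match("green", "car", scene))   # True  (the false positive)
print(strict_match("green", "car", scene))  # False
```

The naive check treats the image as a bag of properties, which is roughly why a simple classifier gets confused. The strict check needs per-object information, which a simple classifier usually does not provide.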

There are two ways to ensure that the image classifier returns a correct result:

A. We use a more powerful image classifier.

B. We train our own image classifier.

Option A has the problem that we will hit a computing bottleneck pretty fast, as we only have limited hardware available for the MediaNight. A fully fledged LLM is highly reliable, but an answer can take up to 5 minutes depending on the hardware.

We currently lean toward option B, which has the problem that we have to pick our words carefully, because players should have unique experiences and be able to play multiple rounds without repeating words. With a 5x5 grid, each round needs 25 words, so we should have around 250 words that are not prone to false positives. This means we will spend a lot of our project time on training and fine-tuning the model.
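The arithmetic behind those numbers: a pool of 250 words split into rounds of 25 gives 10 rounds with no repeated word. A small Python sketch of that calculation follows. The function names and the placeholder word pool are assumptions for illustration, not part of our codebase.

```python
import random


def rounds_without_repeats(pool_size, grid_size):
    """Number of full rounds a word pool supports if no word is reused."""
    return pool_size // (grid_size * grid_size)


def draw_rounds(pool, grid_size, rng):
    """Shuffle the pool and split it into disjoint word sets, one per round."""
    per_round = grid_size * grid_size
    shuffled = list(pool)
    rng.shuffle(shuffled)
    # Leftover words that do not fill a whole board are dropped.
    last_start = len(shuffled) - per_round
    return [shuffled[i:i + per_round] for i in range(0, last_start + 1, per_round)]


print(rounds_without_repeats(250, 5))  # 10
print(rounds_without_repeats(9, 3))    # 1

# Placeholder pool; the real words would come from the trained classifier's labels.
pool = [f"word-{i}" for i in range(250)]
rounds = draw_rounds(pool, 5, random.Random(0))
```

The same calculation shows why a 3x3 grid is a much smaller first target: 9 reliable words are enough for one round.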

Our primary goal in terms of AI integration is to provide a 3x3 grid with 9 selected words for the MediaNight that work reliably with an image classifier in the backend.